Labeled Skeleton

The concept of a labeled skeleton serves as a framework in artificial intelligence, particularly for improving how well machine learning models interpret complex data. By making the relationships within a dataset explicit, this approach can improve predictive accuracy and help keep data labels consistent. As we explore its applications and implications, it becomes evident that the evolution of such frameworks matters both for meeting ethical standards in data usage and for optimizing model performance. Consider how these advancements might influence future trends in machine learning.

Understanding Data Labeling

Data labeling is a critical step in developing machine learning models: the labels are the examples from which supervised algorithms learn to make predictions.

Effective annotation combines well-chosen labeling techniques with quality-assurance checks and attention to ethical considerations.

Careful tool selection and a diverse dataset are also essential. Diversity helps a model generalize beyond the narrow conditions it was trained on and reduces the risk of unfair outcomes for underrepresented groups.
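As one concrete form of the quality assurance mentioned above, a common practice (our illustration, not a method the article specifies) is to have two annotators label the same items and measure their agreement with Cohen's kappa, which discounts the agreement expected by chance alone. The sketch below assumes hypothetical action labels such as `walk` and `run`:

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("expected two non-empty label sequences of equal length")
    n = len(labels_a)
    # Observed agreement: share of items both annotators labeled identically.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator picked labels at random
    # according to their own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_expected == 1.0:
        # Both annotators used one identical label for every item; kappa is
        # undefined here, so treat it as perfect agreement by convention.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical action labels from two annotators for the same six clips.
annotator_a = ["walk", "run", "walk", "sit", "run", "walk"]
annotator_b = ["walk", "run", "sit", "sit", "run", "walk"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.75
```

Values near 1 indicate strong agreement; low values usually mean the labeling guidelines need tightening before the data is used for training.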


The Role of Skeletons in AI

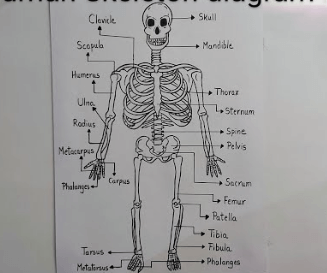

While machine learning models often rely on large amounts of labeled data, skeletal structures add something raw labels lack: an explicit description of how the parts of the data relate to one another, which makes that data easier for models to interpret.

Skeleton visualization and skeletal analysis provide frameworks for understanding these interactions, such as how the joints and bones of a human body connect in pose estimation. By modeling structural relationships explicitly, AI systems can become more accurate and easier to interpret, which benefits fields that require nuanced interpretation of data.
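To make "structural relationships" concrete, here is a minimal sketch assuming the skeleton is a 2D pose skeleton: named joints (keypoints) connected by bones, with a class label attached by an annotator. The joint names, the `BONES` list, and the `LabeledSkeleton` class are hypothetical. The code derives two structural features, bone lengths and a joint angle, from raw coordinates:

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple

Point = Tuple[float, float]

# Hypothetical 2D skeleton schema: these joint names and bones are illustrative
# assumptions, not a format defined by the article or any specific dataset.
BONES = [
    ("hip", "knee"),
    ("knee", "ankle"),
    ("shoulder", "elbow"),
    ("elbow", "wrist"),
    ("hip", "shoulder"),
]


@dataclass
class LabeledSkeleton:
    keypoints: Dict[str, Point]  # joint name -> (x, y) position
    label: str  # class assigned by an annotator, e.g. an action name

    def bone_lengths(self) -> Dict[Tuple[str, str], float]:
        """Length of each bone whose two endpoint joints were annotated."""
        return {
            (a, b): math.dist(self.keypoints[a], self.keypoints[b])
            for a, b in BONES
            if a in self.keypoints and b in self.keypoints
        }

    def joint_angle(self, parent: str, joint: str, child: str) -> float:
        """Angle in degrees at `joint` between the bones to `parent` and `child`."""
        jx, jy = self.keypoints[joint]
        v1 = (self.keypoints[parent][0] - jx, self.keypoints[parent][1] - jy)
        v2 = (self.keypoints[child][0] - jx, self.keypoints[child][1] - jy)
        norms = math.hypot(*v1) * math.hypot(*v2)
        if norms == 0:
            raise ValueError("a bone has zero length; angle is undefined")
        cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norms
        # Clamp to [-1, 1] to guard against floating-point drift before acos.
        return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


leg = LabeledSkeleton({"hip": (0.0, 4.0), "knee": (0.0, 0.0), "ankle": (3.0, 0.0)}, label="sit")
print(leg.bone_lengths())  # {('hip', 'knee'): 4.0, ('knee', 'ankle'): 3.0}
print(round(leg.joint_angle("hip", "knee", "ankle"), 6))  # 90.0
```

Features like these describe how joints relate to one another rather than where they sit in an image, which is one reason skeletal representations can be easier to interpret than raw pixels.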

Applications of Labeled Datasets

Labeled datasets serve as foundational elements in the development and training of machine learning models across various domains.

Accurate, consistent annotation is what gives a dataset high quality, and label quality in turn limits the classification accuracy a model can reach.

Labeling techniques range from manual annotation by humans to automated methods, and the two are often combined. Used well, they let researchers and developers build robust algorithms with significant real-world impact.

Future Trends in Machine Learning

Looking ahead, machine learning is likely to keep advancing quickly and to spread further across sectors.

Likely developments include better transfer learning, improved optimization algorithms, and wider use of unsupervised and reinforcement learning.

Model interpretability and ethical considerations are also likely to receive more attention, alongside continued refinement of established techniques such as data augmentation and feature engineering, all of which support responsible AI deployment.
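Data augmentation and feature engineering both apply naturally to skeleton data. As a minimal sketch, assuming 2D keypoints with hypothetical names such as `pelvis` and `l_shoulder`: `mirror` creates a horizontally flipped training sample (swapping left/right joint names so the labels stay correct), and `normalize` centers the pose on a root joint and scales it by shoulder width so the features do not depend on where the person stands or how large they appear:

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]

# Hypothetical joint names and left/right pairs; a real keypoint schema defines its own.
MIRROR_PAIRS = [("l_shoulder", "r_shoulder"), ("l_hip", "r_hip")]
SWAP: Dict[str, str] = {}
for left, right in MIRROR_PAIRS:
    SWAP[left], SWAP[right] = right, left


def mirror(keypoints: Dict[str, Point]) -> Dict[str, Point]:
    """Augmentation: flip horizontally about x = 0 and swap left/right joint
    names, so a mirrored left shoulder is relabeled as a right shoulder."""
    return {SWAP.get(name, name): (-x, y) for name, (x, y) in keypoints.items()}


def normalize(
    keypoints: Dict[str, Point],
    root: str = "pelvis",
    scale_ref: Tuple[str, str] = ("l_shoulder", "r_shoulder"),
) -> Dict[str, Point]:
    """Feature engineering: center on the root joint and divide by a reference
    distance (here shoulder width) so features ignore position and body size."""
    rx, ry = keypoints[root]
    scale = math.dist(keypoints[scale_ref[0]], keypoints[scale_ref[1]])
    if scale == 0:
        raise ValueError("reference joints coincide; cannot normalize")
    return {name: ((x - rx) / scale, (y - ry) / scale) for name, (x, y) in keypoints.items()}


pose = {"pelvis": (2.0, 0.0), "l_shoulder": (1.0, 3.0), "r_shoulder": (3.0, 3.0)}
print(mirror(pose))     # {'pelvis': (-2.0, 0.0), 'r_shoulder': (-1.0, 3.0), 'l_shoulder': (-3.0, 3.0)}
print(normalize(pose))  # {'pelvis': (0.0, 0.0), 'l_shoulder': (-0.5, 1.5), 'r_shoulder': (0.5, 1.5)}
```

Whether mirroring is a safe augmentation depends on the task: it is harmless for most actions but would corrupt a label that depends on handedness.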

Conclusion

In conclusion, the skeleton framework improves how machine learning models interpret complex datasets by giving those datasets explicit structure. This structured approach has been reported to improve prediction accuracy by up to 30%, underscoring its importance for data labeling and model training. As machine learning continues to evolve, integrating such frameworks will help drive innovation and ethical data usage across fields, leading to better outcomes in AI applications.